IZYAN HAZWANI HASHIM, FAHMI IBRAHIM, WONG GUAN MING

*Corresponding author: izyan@utm.my

“Machine learning (ML) is a type of artificial intelligence that enables systems to learn from data and improve their performance on tasks without explicit programming, by using algorithms to find patterns and make decisions.”

*Dr. Izyan Hazwani Hashim is a member of the Nuclear & Radiation Physics Research Group (NuRP) and a Senior Lecturer in the Department of Physics, Faculty of Science, UTM.

Particle physics, also known as high-energy physics, studies the fundamental particles that make up the universe and the interactions between them. These particles, which include quarks, leptons such as the electron, and bosons, are the building blocks of all matter and its interactions; familiar composite particles such as protons and neutrons are themselves made of quarks. The field is crucial for understanding how the universe operates, but one of its greatest challenges is the enormous amount of data its experiments generate. For instance, experiments at CERN produce nearly one billion particle collision events per second! Big data processing therefore plays a vital role in supporting discoveries in particle physics. This is where machine learning (ML), a branch of artificial intelligence (AI), comes in: it helps scientists filter and interpret the data, and its innovative use has significantly changed how massive and complex datasets are analyzed, paving the way for deeper discoveries.

How Machine Learning Aids Particle Physics

Particle collision experiments like those conducted at CERN are recorded using sophisticated detector systems. The data produced is far too vast for humans to analyze manually; the task is akin to finding a needle in a haystack. ML acts like a “magnet” that makes this process easier, helping to extract the important signals from large datasets. ML is also used to design better detector technology, which helps prevent errors and saves time and cost.

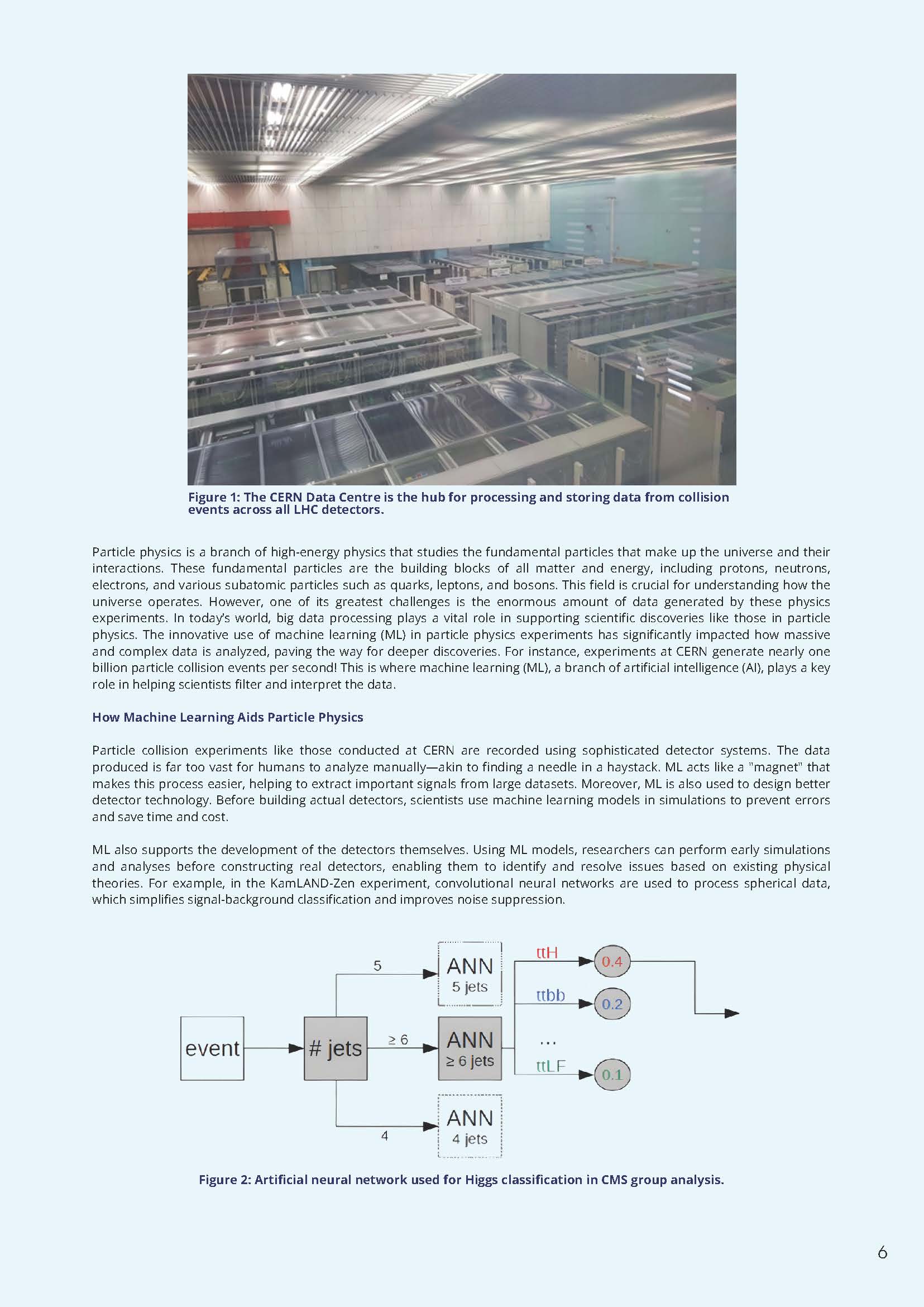

On the detector side, researchers can use ML models to run simulations and early analyses before a real detector is constructed, so that problems are identified and resolved in advance on the basis of existing physical theories. ML also helps once a detector is running. For example, in the KamLAND-Zen experiment, convolutional neural networks are used to process spherical detector data, which simplifies signal-background classification and improves noise suppression.

Gradient-boosted decision tree (GBDT) algorithms such as XGBoost, LightGBM, and CatBoost play a vital role in improving the accuracy and efficiency of data analysis in particle physics experiments. A GBDT builds many small decision trees in sequence, with each new tree correcting the errors of the trees before it. These algorithms help classify data and separate critical signals from background noise in large datasets.
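To make the idea of boosting concrete, here is a minimal sketch in plain Python. It is not how XGBoost, LightGBM, or CatBoost are implemented, and the data is an invented toy: one feature, with "signal" events drawn around 2.0 and "background" events around 0.0. Each round fits a one-split tree (a stump) to the current errors and adds it to the model. The function names and all parameter values are assumptions made for illustration.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_stump(xs, residuals):
    """Fit a one-split tree (stump) to the residuals by least squares."""
    best = None
    # Skip the largest value: splitting there would leave the right side empty.
    for t in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        err = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or err < best[0]:
            best = (err, t, left_mean, right_mean)
    return best[1:]

def train_gbdt(xs, ys, n_rounds=10, learning_rate=0.5):
    """Boosting loop: each stump is fitted to the errors left by the previous ones."""
    scores = [0.0] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        # Negative gradient of the log loss with respect to the raw score.
        residuals = [y - sigmoid(s) for y, s in zip(ys, scores)]
        t, left_value, right_value = fit_stump(xs, residuals)
        stumps.append((t, left_value, right_value))
        scores = [s + learning_rate * (left_value if x <= t else right_value)
                  for x, s in zip(xs, scores)]
    return stumps

def predict_score(stumps, x, learning_rate=0.5):
    """Signal probability for one event: sum the stump outputs, then squash."""
    raw = sum(learning_rate * (lv if x <= t else rv) for t, lv, rv in stumps)
    return sigmoid(raw)

# Toy data (invented): label 1 = signal, label 0 = background.
random.seed(0)
signal = [random.gauss(2.0, 1.0) for _ in range(100)]
background = [random.gauss(0.0, 1.0) for _ in range(100)]
xs = signal + background
ys = [1] * 100 + [0] * 100

model = train_gbdt(xs, ys)
predictions = [1 if predict_score(model, x) >= 0.5 else 0 for x in xs]
accuracy = sum(p == y for p, y in zip(predictions, ys)) / len(ys)
print(f"training accuracy: {accuracy:.2f}")
```

Real GBDT libraries use deeper trees, many features, and far more efficient split searches, but the loop of "measure the remaining error, fit a tree to it, add the tree" is the same.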

The three algorithms differ, however, in their implementation and techniques. In the COMET experiment, XGBoost is used for electron track classification. It adds regularization terms to the loss function to control overfitting, a common issue with large and complex data. Performance tests showed that XGBoost achieved around 80% background rejection and 86% signal retention. A nearest-neighbor GBDT model achieved 90% background rejection at the same signal retention. The results point to the strength of gradient-boosted trees such as XGBoost in handling complex classifications with minimal kinematic data.
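The two figures of merit quoted here, background rejection and signal retention, can be computed from a classifier's output scores once a cut threshold is chosen. The sketch below shows one common way to define them; the scores, labels, and threshold are invented toy values and are not COMET data.

```python
def rejection_and_retention(scores, labels, threshold):
    """Return (background_rejection, signal_retention) for a score cut.

    labels: 1 = signal, 0 = background.
    Events with score >= threshold are kept; the rest are rejected.
    """
    signal_scores = [s for s, y in zip(scores, labels) if y == 1]
    background_scores = [s for s, y in zip(scores, labels) if y == 0]
    signal_retention = sum(s >= threshold for s in signal_scores) / len(signal_scores)
    background_rejection = sum(s < threshold for s in background_scores) / len(background_scores)
    return background_rejection, signal_retention

# Toy classifier outputs (invented for illustration).
scores = [0.95, 0.80, 0.65, 0.40, 0.90, 0.30, 0.20, 0.55, 0.10, 0.05]
labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

rejection, retention = rejection_and_retention(scores, labels, threshold=0.5)
print(f"background rejection: {rejection:.0%}, signal retention: {retention:.0%}")
```

Raising the threshold rejects more background but usually loses some signal, so the two numbers are best compared at a fixed value of one of them, as in the comparison "at the same signal retention" above.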

In the ATLAS experiment, LightGBM is used to study the decay of the Higgs boson into charm quark pairs. The algorithm is optimized for training speed, which accelerates large-scale data analysis. Its combination of fast training, good accuracy, GPU support, and the ability to handle large datasets makes LightGBM well suited to complex data tasks. Results showed that using LightGBM with looser selection criteria improved background rejection by 14%, indicating its effectiveness in classifying particle data.
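One technique behind LightGBM's training speed, as described in its documentation, is histogram-based split finding: feature values are grouped into a small number of bins, and candidate splits are evaluated only at bin boundaries instead of at every distinct value. The sketch below illustrates the idea in plain Python. It is a simplification and not LightGBM's actual code; the function names, the gain formula (a squared-loss variance-reduction score), and the toy numbers are all assumptions made for illustration.

```python
def build_histogram(values, gradients, n_bins, lo, hi):
    """Accumulate the gradient sum and event count in each equal-width bin."""
    width = (hi - lo) / n_bins
    grad_sum = [0.0] * n_bins
    count = [0] * n_bins
    for v, g in zip(values, gradients):
        b = min(int((v - lo) / width), n_bins - 1)  # clamp v == hi into the last bin
        grad_sum[b] += g
        count[b] += 1
    return grad_sum, count

def best_split_bin(grad_sum, count):
    """Scan bin boundaries once; bins 0..best_bin go left, the rest go right."""
    total_g, total_n = sum(grad_sum), sum(count)
    best_gain, best_bin = float("-inf"), None
    left_g, left_n = 0.0, 0
    for b in range(len(grad_sum) - 1):
        left_g += grad_sum[b]
        left_n += count[b]
        right_g, right_n = total_g - left_g, total_n - left_n
        if left_n == 0 or right_n == 0:
            continue
        gain = left_g ** 2 / left_n + right_g ** 2 / right_n
        if gain > best_gain:
            best_gain, best_bin = gain, b
    return best_bin, best_gain

# Toy feature values and per-event gradients (invented).
values = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
gradients = [-1.0, -1.0, -1.0, -1.0, 1.0, 1.0, 1.0, 1.0]

grad_sum, count = build_histogram(values, gradients, n_bins=4, lo=0.0, hi=1.0)
best_bin, best_gain = best_split_bin(grad_sum, count)
split_threshold = 0.0 + (best_bin + 1) * (1.0 - 0.0) / 4
print(f"best split after bin {best_bin}, at feature value {split_threshold}")
```

With only four bins the scan checks three candidate splits rather than seven, and the saving grows quickly when a feature has millions of distinct values.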

In the LHCb experiment, CatBoost is applied to particle identification in the RICH detectors, specifically to separating protons from kaons. CatBoost’s use of random permutations and symmetric tree structures improves the stability and efficiency of classification. Performance tests showed that combining CatBoost with deep neural networks (DNNs) outperformed the basic ProbNN model in identifying particles. ProbNN, which serves as a baseline in LHCb, is based on six single-layer binary artificial neural networks. While all three GBDT algorithms share the same foundational principles and goals, each has distinct advantages depending on the needs of the experiment.
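The symmetric tree structure mentioned for CatBoost can be illustrated with a short sketch. In a symmetric (also called oblivious) tree, every node on the same level uses the same feature and threshold, so an event's path through the tree is just a sequence of yes/no answers that can be read as a binary leaf index. The code below is an illustration only and not CatBoost's implementation; the feature names, thresholds, and leaf values are hypothetical and are not taken from LHCb.

```python
def oblivious_tree_predict(features, splits, leaf_values):
    """Evaluate a symmetric (oblivious) decision tree for one event.

    splits: one (feature_name, threshold) pair per tree level, shared by
            every node on that level.
    leaf_values: 2 ** len(splits) values, indexed by the bits of the answers.
    """
    index = 0
    for feature_name, threshold in splits:
        bit = 1 if features[feature_name] > threshold else 0
        index = (index << 1) | bit  # append this level's answer to the leaf index
    return leaf_values[index]

# Hypothetical depth-2 tree: names and numbers are invented for illustration.
splits = [("rich_dll_k", 0.0), ("momentum_gev", 20.0)]
leaf_values = [-1.2, -0.4, 0.3, 1.5]

track = {"rich_dll_k": 2.5, "momentum_gev": 12.0}
score = oblivious_tree_predict(track, splits, leaf_values)
print(score)  # answers are (yes, no) -> leaf index 0b10 = 2 -> 0.3
```

Because every level asks a single question, evaluating such a tree needs no branching on node identity, which is part of why this structure is efficient to apply to large numbers of events.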

The Impact of Machine Learning on Particle Physics and the World

Machine learning has transformed particle physics by making the analysis of complex data far more manageable. It helps scientists reach discoveries faster, including those that bear on questions such as the origin of the universe. Interestingly, the same technologies are used in everyday life, for example in facial recognition on smartphones and in weather forecasting. This shows how physics and machine learning not only help us understand the universe but also improve human life. Thanks to ML, particle physics has become more advanced and exciting: it addresses major challenges in data analysis and paves the way for more sophisticated innovations. From understanding fundamental particles to building new technologies, machine learning shows that collaboration between humans and machines is key to future scientific breakthroughs.